ECS Exec simplifies the process of running commands or obtaining an interactive shell within a container, similar to docker exec.

I read the AWS blog post "Using Amazon ECS Exec to access your containers on AWS Fargate and Amazon EC2" to understand how it works.

Here are my key takeaways:

Container Requirements:

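ECS Exec can only run binaries that already exist in the container image. If you want to use tools such as netstat or heapdump during an exec session, they must be pre-installed in the container's base image. Likewise, to open an interactive shell, the image must contain a shell such as /bin/bash or /bin/sh.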

SSM Session Manager Integration:

ECS Exec uses AWS Systems Manager (SSM) Session Manager to establish a secure connection between the device that initiates the exec command and the target container. The SSM agent binaries are bind-mounted into the container, and the Fargate agent (or the ECS container agent on EC2) starts the SSM core agent alongside the application code.

Security Measures:

By default, communication between the client and the container is encrypted with TLS 1.2. For an additional layer, you can encrypt the data channel with your own AWS Key Management Service (KMS) key. The ECS task role must also be granted IAM permissions that let the SSM core agent call the SSM service and establish the secure channel.
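The cluster configuration in ecs.tf and the task-role policy in policies.tf both reference aws_kms_key.ecs_exec, but its definition isn't shown in this post. A minimal sketch of such a key, assuming the default key policy (which lets IAM policies in the account grant access to the key); the description, deletion window, and rotation setting are illustrative choices, not taken from the repo:

```hcl
# Hypothetical sketch: customer managed KMS key for the ECS Exec data channel.
# The name "ecs_exec" matches the aws_kms_key.ecs_exec references in this post;
# the settings below are illustrative assumptions.
resource "aws_kms_key" "ecs_exec" {
  description             = "Encrypts the ECS Exec data channel"
  deletion_window_in_days = 7    # allowed range is 7 to 30 days
  enable_key_rotation     = true # rotate the key material automatically
}
```

With a key like this in place, the task role still needs kms:Decrypt on it, which is the last statement of the task-role policy in policies.tf.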

Logging Capabilities:

ECS Exec facilitates logging of commands and their output to:

- Amazon S3 bucket

- Amazon CloudWatch log group

The container image must include the script and cat utilities for command logs to be uploaded to S3 and/or CloudWatch.

Audit Trail and Monitoring:

When logging is configured, shell sessions and command output are logged for auditing. AWS CloudTrail records each ECS ExecuteCommand API call, including who made it and the command that was requested. The task role needs the appropriate IAM permissions to write the output to S3 and/or CloudWatch.
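The permissions above belong to the task. The people or pipelines that call ExecuteCommand (the calls CloudTrail records) need their own identity policy. A minimal sketch, assuming you want to allow exec only into one cluster and one named container; the policy name is hypothetical, and local.region, local.account_id, var.cluster_name and var.container_name are the values used elsewhere in this configuration:

```hcl
# Hypothetical sketch: identity policy for callers of ecs:ExecuteCommand.
# Allows exec only into tasks of one cluster, and only into the named container.
data "aws_iam_policy_document" "ecs_exec_caller" {
  statement {
    actions   = ["ecs:ExecuteCommand"]
    resources = ["arn:aws:ecs:${local.region}:${local.account_id}:task/${var.cluster_name}/*"]

    condition {
      test     = "StringEquals"
      variable = "ecs:container-name"
      values   = [var.container_name]
    }
  }
}

resource "aws_iam_policy" "ecs_exec_caller" {
  name   = "ecs-exec-caller" # hypothetical name
  policy = data.aws_iam_policy_document.ecs_exec_caller.json
}
```

You would then attach this policy to the IAM users or roles that are allowed to open sessions.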

However, I haven't found which utilities need to be pre-installed in Microsoft Windows container images.

The article above walks through a practical example of getting started with ECS Exec using the AWS CLI, including how to open an interactive shell in an nginx container that is part of a task running on Fargate.

Deploy the resources

I went a step further and deployed the same setup with Terraform. You can find the repository for this example here. In the following sections, I describe the Terraform approach I used and share additional insights and lessons learned.

policies.tf

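IAM roles let AWS services assume a role and inherit its permissions. For ECS, we define two roles, ecs_exec_demo_task_execution_role and ecs_exec_demo_task_role, each with a trust policy that lets ECS tasks (the ecs-tasks.amazonaws.com service principal) assume it. The execution role is used by ECS itself, for example to pull the image and ship container logs, while the task role is used from inside the container, which is where the SSM agent gets its permissions for ECS Exec.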

# Trust policy: allow ECS tasks to assume the roles below
data "aws_iam_policy_document" "ecs_tasks_trust_policy" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ecs-tasks.amazonaws.com"]
    }
  }
}

# Role used by ECS to pull the image and write container logs
resource "aws_iam_role" "ecs_exec_demo_task_execution_role" {
  name               = "ecs-exec-demo-task-execution-role"
  assume_role_policy = data.aws_iam_policy_document.ecs_tasks_trust_policy.json
}

# Role used inside the container (including by the SSM agent for ECS Exec)
resource "aws_iam_role" "ecs_exec_demo_task_role" {
  name               = "ecs-exec-demo-task-role"
  assume_role_policy = data.aws_iam_policy_document.ecs_tasks_trust_policy.json
}
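As an optional hardening step, the ECS documentation on task IAM roles shows adding the aws:SourceAccount and aws:SourceArn condition keys to the trust policy to guard against the confused-deputy problem. A sketch of the same trust policy with those conditions, assuming local.account_id and local.region hold the current account ID and region (as in the task-role policy that follows); the data source name is hypothetical:

```hcl
# Hypothetical variant of ecs_tasks_trust_policy: only ECS tasks launched in
# this account and region may assume a role that uses it.
data "aws_iam_policy_document" "ecs_tasks_trust_policy_scoped" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ecs-tasks.amazonaws.com"]
    }

    # Multiple condition blocks are combined with a logical AND
    condition {
      test     = "StringEquals"
      variable = "aws:SourceAccount"
      values   = [local.account_id]
    }

    condition {
      test     = "ArnLike"
      variable = "aws:SourceArn"
      values   = ["arn:aws:ecs:${local.region}:${local.account_id}:*"]
    }
  }
}
```

To use it, point the task role's assume_role_policy at data.aws_iam_policy_document.ecs_tasks_trust_policy_scoped.json.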

The policies define what each role may do. The execution role gets the AWS managed AmazonECSTaskExecutionRolePolicy, while the task role gets a custom policy covering the SSM message channels ECS Exec needs, plus CloudWatch Logs, S3, and KMS decryption permissions for logging and encrypting exec sessions.

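# Current region and account, used to build the ARNs below
# (omit these if your configuration already declares them)
data "aws_region" "current" {}

data "aws_caller_identity" "current" {}

locals {
  region     = data.aws_region.current.name
  account_id = data.aws_caller_identity.current.account_id
}

# Permissions for the task role (used by the SSM agent inside the container)
data "aws_iam_policy_document" "ecs_tasks_role_policy" {
  # Session Manager control and data channels used by ECS Exec
  statement {
    actions = [
      "ssmmessages:CreateControlChannel",
      "ssmmessages:CreateDataChannel",
      "ssmmessages:OpenControlChannel",
      "ssmmessages:OpenDataChannel",
    ]
    resources = ["*"]
  }

  # Session logging to CloudWatch Logs
  statement {
    actions   = ["logs:DescribeLogGroups"]
    resources = ["*"]
  }

  statement {
    actions = [
      "logs:CreateLogStream",
      "logs:DescribeLogStreams",
      "logs:PutLogEvents",
    ]
    resources = [
      "arn:aws:logs:${local.region}:${local.account_id}:log-group:${var.log_group}:*",
    ]
  }

  # Session logging to S3
  statement {
    actions   = ["s3:PutObject"]
    resources = ["${aws_s3_bucket.ecs_exec_output.arn}/*"]
  }

  statement {
    actions   = ["s3:GetEncryptionConfiguration"]
    resources = [aws_s3_bucket.ecs_exec_output.arn]
  }

  # Decrypt the data channel encrypted with the customer managed KMS key
  statement {
    actions   = ["kms:Decrypt"]
    resources = [aws_kms_key.ecs_exec.arn]
  }
}

# Customer managed policy built from the document above (name is illustrative)
resource "aws_iam_policy" "ecs_tasks_role_policy" {
  name   = "ecs-exec-demo-task-role-policy"
  policy = data.aws_iam_policy_document.ecs_tasks_role_policy.json
}

# AWS managed policy for the execution role (image pulls, container logs)
data "aws_iam_policy" "ECSTaskExecutionRolePolicy" {
  arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
}

resource "aws_iam_role_policy_attachment" "task_execution_role" {
  role       = aws_iam_role.ecs_exec_demo_task_execution_role.name
  policy_arn = data.aws_iam_policy.ECSTaskExecutionRolePolicy.arn
}

resource "aws_iam_role_policy_attachment" "task_role" {
  role       = aws_iam_role.ecs_exec_demo_task_role.name
  policy_arn = aws_iam_policy.ecs_tasks_role_policy.arn
}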

logging.tf

S3 bucket names must be globally unique, so we generate a random string and include it in the bucket name to avoid naming conflicts. The same file creates the CloudWatch log group that receives session output and outputs the bucket name.

# Random suffix so the bucket name is globally unique
resource "random_string" "random" {
  length  = 10
  special = false
}

resource "aws_s3_bucket" "ecs_exec_output" {
  # Referencing random_string already makes Terraform create it first,
  # so no explicit depends_on is needed
  bucket = "ecs-exec-output-${lower(random_string.random.result)}"
}

resource "aws_cloudwatch_log_group" "ecs_exec_output" {
  name = var.log_group
}

output "bucket_name" {
  value = aws_s3_bucket.ecs_exec_output.bucket
}
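The bucket above relies on S3 defaults. A sketch of two optional hardening resources, assuming AWS provider v4 or later (where these settings are separate resources): a public access block, and explicit default encryption with S3-managed keys (SSE-S3). SSE-S3 rather than SSE-KMS is a deliberate choice here: with SSE-KMS, uploads of session logs would also require kms:GenerateDataKey on the bucket's key, and the task-role policy in policies.tf only grants kms:Decrypt.

```hcl
# Optional hardening for the exec-output bucket (illustrative sketch).
resource "aws_s3_bucket_public_access_block" "ecs_exec_output" {
  bucket                  = aws_s3_bucket.ecs_exec_output.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Default encryption with S3-managed keys (SSE-S3)
resource "aws_s3_bucket_server_side_encryption_configuration" "ecs_exec_output" {
  bucket = aws_s3_bucket.ecs_exec_output.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}
```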

networking.tf

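The aws_default_vpc resource brings the region's default VPC under Terraform management, and the two public subnets are looked up by ID from variables. The http data source queries https://ipconfig.io to obtain the public IP address of the machine running Terraform, so the security group allows inbound traffic only from that address. ECS Exec itself does not need an inbound rule: the SSM agent in the container opens outbound HTTPS connections to Session Manager, which the egress rule permits.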

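# Manage the region's default VPC
resource "aws_default_vpc" "default" {}

# Public subnets for the tasks (they must belong to the default VPC,
# because the security group below lives there)
data "aws_subnet" "public1" {
  id = var.subnet_public1_id
}

data "aws_subnet" "public2" {
  id = var.subnet_public2_id
}

# Public IP address of the machine running Terraform
data "http" "my_ip" {
  url = "https://ipconfig.io"
}

resource "aws_security_group" "ecs_exec" {
  name   = "ecs-exec"
  vpc_id = aws_default_vpc.default.id

  # All traffic from the current public IP only (chomp strips a trailing newline)
  ingress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["${chomp(data.http.my_ip.response_body)}/32"]
  }

  # All outbound traffic, including HTTPS to the SSM endpoints used by ECS Exec
  egress {
    from_port        = 0
    to_port          = 0
    protocol         = "-1"
    cidr_blocks      = ["0.0.0.0/0"]
    ipv6_cidr_blocks = ["::/0"]
  }
}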
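If you only ever reach the task through ECS Exec, the ingress rule isn't needed, because the session is set up by the SSM agent dialing out. A hypothetical, tighter security group that allows only outbound HTTPS, assuming the image registry, CloudWatch Logs, S3, and Session Manager are all reached over port 443; you would reference it from the service's network_configuration in place of aws_security_group.ecs_exec:

```hcl
# Hypothetical egress-only security group for tasks reached via ECS Exec.
resource "aws_security_group" "ecs_exec_egress_only" {
  name   = "ecs-exec-egress-only"
  vpc_id = aws_default_vpc.default.id

  # No ingress blocks: ECS Exec sessions are established by the SSM agent
  # connecting out, so nothing needs to connect in.
  egress {
    description = "HTTPS to SSM, the image registry, CloudWatch Logs and S3"
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```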

ecs.tf

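We define an ECS cluster whose configuration block contains an execute_command_configuration block. This block configures ECS Exec for the whole cluster: the KMS key that encrypts the data channel and, because logging is set to OVERRIDE, the CloudWatch log group and S3 bucket that receive session output.

The task definition targets Fargate, uses the awsvpc network mode, and takes the task CPU, memory, operating system family, and CPU architecture from variables.

Its single container is described by the local value container_definition, which sets the container name, image, command, and entry point, plus an awslogs log configuration that sends the container's output to the CloudWatch log group; jsonencode converts it to JSON for the task definition. The command and entry point are space-separated strings split into lists, so individual arguments cannot contain spaces. When the operating system family is Linux, we also set linuxParameters with initProcessEnabled, which AWS recommends for ECS Exec so that processes spawned by the SSM agent are cleaned up instead of being left orphaned.

The service runs var.instance_count copies of the task in the cluster created above and sets enable_execute_command, which is what allows ECS Exec into its tasks.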

locals {
  container_definition = {
    name  = var.container_name
    image = var.container_image
    # Space-separated strings split into lists (arguments can't contain spaces)
    command    = split(" ", var.command)
    entryPoint = split(" ", var.entryPoint)
    # Send the container's stdout/stderr to CloudWatch Logs
    logConfiguration = {
      logDriver = "awslogs"
      options = {
        "awslogs-group"         = var.log_group
        "awslogs-region"        = data.aws_region.current.name
        "awslogs-stream-prefix" = "container-stdout"
      }
    }
    # Linux only: run an init process as PID 1 so orphaned child processes
    # (e.g. those started by the SSM agent) are reaped
    linuxParameters = startswith(upper(var.os_family), "LINUX") ? { initProcessEnabled = true } : null
  }
}

resource "aws_ecs_cluster" "ecs_exec" {
  name = var.cluster_name

  configuration {
    execute_command_configuration {
      # Customer managed key that encrypts the exec data channel
      kms_key_id = aws_kms_key.ecs_exec.arn
      # OVERRIDE: send session output to the destinations below
      # (DEFAULT would reuse the task's awslogs settings)
      logging = "OVERRIDE"

      log_configuration {
        cloud_watch_log_group_name = aws_cloudwatch_log_group.ecs_exec_output.name
        s3_bucket_name             = aws_s3_bucket.ecs_exec_output.bucket
        s3_key_prefix              = var.s3_key_prefix
      }
    }
  }
}

resource "aws_ecs_task_definition" "ecs_exec" {
  family                   = var.task_definition_name
  network_mode             = "awsvpc"
  execution_role_arn       = aws_iam_role.ecs_exec_demo_task_execution_role.arn
  # The task role carries the ssmmessages permissions ECS Exec needs
  task_role_arn            = aws_iam_role.ecs_exec_demo_task_role.arn
  requires_compatibilities = ["FARGATE"]
  cpu                      = var.task_cpu
  memory                   = var.task_memory

  runtime_platform {
    operating_system_family = var.os_family
    cpu_architecture        = var.cpu_arch
  }

  container_definitions = jsonencode([local.container_definition])
}

resource "aws_ecs_service" "ecs_exec" {
  name            = var.service_name
  cluster         = aws_ecs_cluster.ecs_exec.id
  task_definition = aws_ecs_task_definition.ecs_exec.arn
  desired_count   = var.instance_count
  launch_type     = "FARGATE"
  # Required for ECS Exec into this service's tasks
  enable_execute_command = true
  # Wait for the tasks to be running, so a task ARN lookup after apply finds one
  wait_for_steady_state = true

  network_configuration {
    subnets = [
      data.aws_subnet.public1.id,
      data.aws_subnet.public2.id,
    ]
    security_groups = [aws_security_group.ecs_exec.id]
    # Public IP so the task can reach SSM and the image registry without a NAT gateway
    assign_public_ip = true
  }
}

outputs.tf

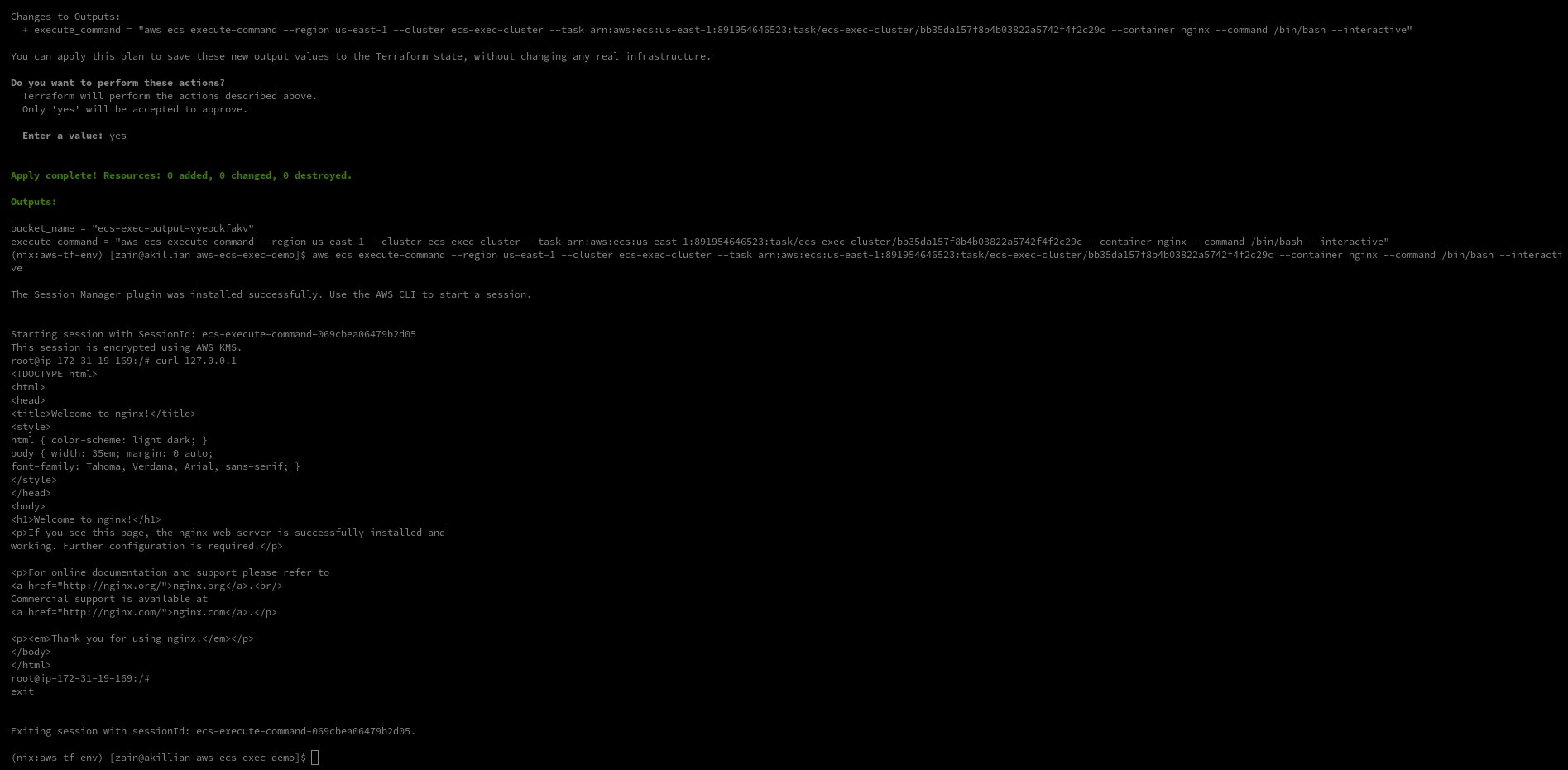

Here, we automate generating the aws ecs execute-command invocation that opens an interactive shell in a specific container of the ECS task. The command differs slightly by OS: /bin/bash for Linux containers and powershell.exe for Windows containers. The task ARN is looked up by an external data source that runs a Bash command using the AWS CLI and jq, so both must be installed where Terraform runs.

# Look up the ARN of a running task in the service (requires the AWS CLI and jq).
# Referencing the service makes the lookup wait for it; if no task is running
# yet, task_arn is an empty string instead of the script failing.
data "external" "task_arn" {
  program = ["bash", "-c", "aws ecs list-tasks --region ${data.aws_region.current.name} --cluster ${aws_ecs_cluster.ecs_exec.name} --service-name ${aws_ecs_service.ecs_exec.name} --output json | jq '{task_arn: (.taskArns[0] // \"\")}'"]
}

locals {
  # Linux containers get bash; Windows containers get PowerShell
  exec_shell = startswith(upper(var.os_family), "LINUX") ? "/bin/bash" : "powershell.exe"
}

output "execute_command" {
  value = "aws ecs execute-command --region ${data.aws_region.current.name} --cluster ${aws_ecs_cluster.ecs_exec.name} --task ${data.external.task_arn.result.task_arn} --container ${var.container_name} --command ${local.exec_shell} --interactive"
}
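Because the task ARN is resolved when Terraform runs, the printed command goes stale as soon as ECS replaces the task. An alternative sketch, assuming a Linux container and a bash-compatible shell on the client, defers the lookup to the moment you run the command by embedding an aws ecs list-tasks call in a shell command substitution; the output name is hypothetical:

```hcl
# Hypothetical output: the client's shell resolves the task ARN at run time
# via $(...), so the command keeps working after tasks are replaced.
# HCL only interpolates ${...}, so $( ... ) is passed through unchanged.
output "execute_command_lazy" {
  value = join(" ", [
    "aws ecs execute-command",
    "--region ${data.aws_region.current.name}",
    "--cluster ${aws_ecs_cluster.ecs_exec.name}",
    "--task $(aws ecs list-tasks --region ${data.aws_region.current.name} --cluster ${aws_ecs_cluster.ecs_exec.name} --service-name ${aws_ecs_service.ecs_exec.name} --query 'taskArns[0]' --output text)",
    "--container ${var.container_name}",
    "--command /bin/bash",
    "--interactive",
  ])
}
```

This variant also removes the need for jq and the external data source on the machine running Terraform; the trade-off is that the AWS CLI lookup runs every time you open a session.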

After terraform apply, we can connect to the container by running the printed command with the AWS CLI. The Session Manager plugin for the AWS CLI must be installed on the client.

Below is a screenshot of a session:

To run Windows containers instead, see terraform.tfvars in the repo.
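The repo's Windows settings aren't reproduced in this post. As an illustration only, a terraform.tfvars for a Windows container on Fargate might set values like these; the image tag, task size, and keep-alive command are assumptions, not the repo's contents, and only the variables affected by the OS switch are shown:

```hcl
# Hypothetical terraform.tfvars for a Windows container on Fargate.
os_family       = "WINDOWS_SERVER_2019_CORE" # makes the output use powershell.exe
cpu_arch        = "X86_64"                   # Windows on Fargate runs on x86_64
task_cpu        = 1024                       # assumed size: 1 vCPU
task_memory     = 2048                       # assumed size: 2 GB
container_image = "mcr.microsoft.com/windows/servercore:ltsc2019"

# Keep the container running so there is something to exec into;
# both strings are split on spaces by the container_definition local.
entryPoint = "powershell.exe -Command"
command    = "Start-Sleep -Seconds 86400"
```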

Resources

- Introducing Amazon ECS Exec to access your Windows containers on Amazon EC2

- Using Amazon ECS Exec to access your containers on AWS Fargate and Amazon EC2